As it turns out, right around the time we were talking to the people from Dell in Palo Alto, Google was tackling the same problem, not for finance but for search. Google had looked at what was happening in its core business and tapped two of the best computer scientists in the world, John Hennessy and David Patterson, to help. Those of you who have ever taken a computer architecture class will know them as “Patterson and Hennessy,” the John Lennon and Paul McCartney of computer architecture. Not only are they the authors of the definitive work on the subject, Computer Architecture: A Quantitative Approach, they are also the primary advocates of RISC, the architecture used in over 90% of the world’s computing devices. The research effort resulted in Google’s TPU-centric architecture, something that almost single-handedly ensured Google’s dominance in search for the next decade. While much has been said about the TPU, it is a specific integrated architecture built for a specific problem, one that many are now attempting to adapt to other problems. The first version of the TPU is presented below.

The Coming Golden Age of Computer Architecture

We will leave the TPU, and how it could possibly be used in finance, for another entry. Instead, I will turn to Hennessy and Patterson’s Turing lecture, given in mid-2018 and entitled A New Golden Age for Computer Architecture: Domain-Specific Hardware/Software Co-Design.

There is far too much in this talk to summarize quickly, but one of their main points is that the free ride programmers have enjoyed by ignoring hardware is now at an end, and groups will have to start addressing hardware and software together, exactly as we have done with the systems we built. They argue that this need is imperative and that we will enter a Golden Age for Computer Architecture because we will have to innovate with hardware and software together, or systems will crash and companies will go bankrupt. The reason for this is simple.

While Moore’s Law is ending, Big Data continues to get Bigger

For the last three human generations (60 years), we have operated in a world that assumed computing would decrease in cost and increase in power in line with Moore’s Law. The fact that Moore’s Law has ceased to hold has not changed the way we produce or consume data, which continues to grow; hence, “Big Data” is the new oil of the information economy. In short, our entire economy is based upon the underlying idea that computing will increase in power and decrease in cost, so that we can continue to refine our ever-increasing data into actionable information. However, under the current paradigm of separated hardware and software systems, this will not occur.

To put this in context, processors historically improved in speed by somewhere between 30% and 50% a year; now they are improving by only about 3% a year. While some believe that our salvation lies in quantum computing, Patterson and Hennessy state, appropriately, that it is at least ten to twenty years away from providing a viable alternative to our current computing methods. That leaves us in a situation where we have to push our systems to become faster, or in other words, to strip the slack out of our current software and hardware architectures.

Integrated Architecture Can Save Us

Now, let’s discuss this with real numbers. Assume that we have to keep doubling our processing speed every 18 months for the next 15 years simply to keep up with the demand embedded in our “Big Data refining” system and to bridge us to the time when quantum computing becomes viable. That would mean doubling our speed 10 times (15 years / an average doubling time of 1.5 years), which means we would have to wring an improvement of roughly 1,000 times (2^10 = 1,024) out of our current systems!
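The arithmetic above can be checked in a few lines of Python. This is only a sketch: the function names `required_speedup` and `compound_growth` are made up for illustration, and the 1.5-year doubling time is the assumption stated in the text, not a measured figure.

```python
def required_speedup(years, doubling_time_years):
    """Return (number of doublings, total speedup factor) over the period."""
    doublings = years / doubling_time_years
    return doublings, 2.0 ** doublings


def compound_growth(annual_rate, years):
    """Total improvement factor from a fixed yearly improvement rate."""
    return (1.0 + annual_rate) ** years


# The scenario from the text: 15 years, doubling every 1.5 years.
doublings, speedup = required_speedup(15, 1.5)
print(f"{doublings:.0f} doublings, {speedup:,.0f}x total")

# For contrast, what the yearly improvement rates quoted earlier compound to.
for rate in (0.03, 0.30, 0.50):
    print(f"{rate:.0%} a year for 15 years: {compound_growth(rate, 15):,.1f}x")
```

At 3% a year, 15 years of hardware improvement compounds to only about 1.6 times, which is the gap between what hardware alone will deliver and the roughly 1,000 times the scenario asks for.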

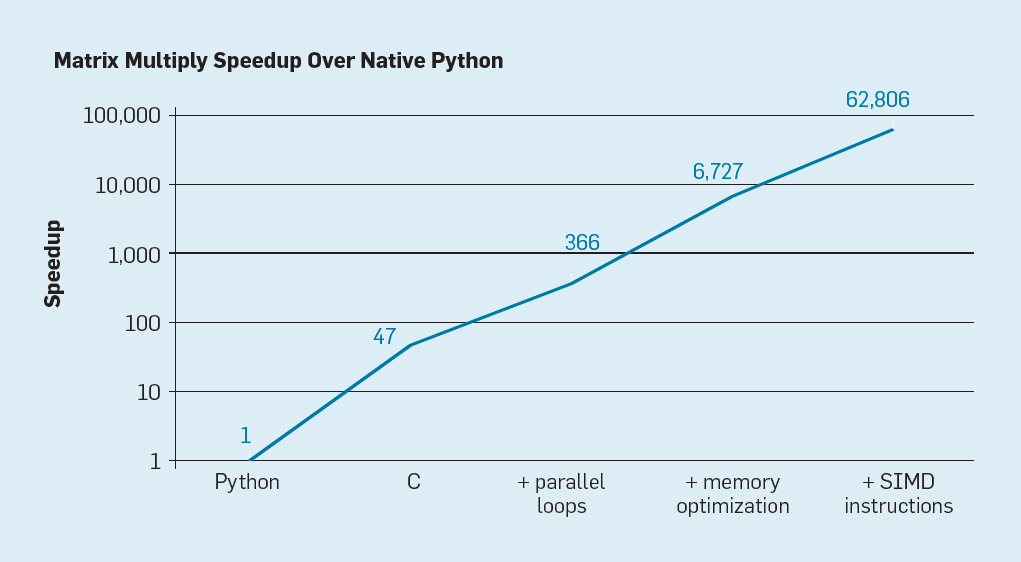

While most non-experts believe that wringing a 1,000-fold increase out of current architectures is impossible, Hennessy and Patterson cite a study by a group of researchers at MIT showing that, for matrix operations, they can speed up the process by over 62,000 times relative to a base, inefficient, hardware-agnostic implementation in Python! This result is presented below:

The Problem with Python, Again

So this raises the question: what is the most used language in finance today? Of course, as I’ve already pointed out, it’s hardware-agnostic Python, great for programmers’ ease but bad for processing speed! This is one of the reasons that all those billion-dollar, large-scale Python implementations I mentioned earlier in this blog have had problems.
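To make “hardware-agnostic” concrete, here is a minimal sketch of the kind of baseline such comparisons start from: a triple-nested loop matrix multiply in pure Python. It is not the MIT group's code. The function names, the matrix size, and the second variant (which transposes one operand and leans on built-ins) are my own assumptions for illustration.

```python
import random
import time


def matmul_naive(a, b):
    """Textbook triple loop: one interpreted multiply-add per inner step."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
    return c


def matmul_transposed(a, b):
    """Same math, but b is transposed once so each dot product walks two rows."""
    b_columns = list(zip(*b))  # each tuple is one column of b
    return [[sum(x * y for x, y in zip(row, col)) for col in b_columns]
            for row in a]


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


size = 60  # kept small so the sketch runs in well under a second
a = [[random.random() for _ in range(size)] for _ in range(size)]
b = [[random.random() for _ in range(size)] for _ in range(size)]

_, t_naive = timed(matmul_naive, a, b)
_, t_transposed = timed(matmul_transposed, a, b)
print(f"naive: {t_naive:.4f}s, transposed: {t_transposed:.4f}s")
```

Any difference between the two timings here comes mostly from interpreter overhead, not from the hardware. The very large gains in the study Hennessy and Patterson cite come from steps this sketch does not attempt, such as moving to compiled code, parallelizing the loops, and using the processor's vector instructions.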

Our solution at the ULISSES Project is not to get financial users to stop using Python, but instead to adapt the technologies to the users. In this case, that means coding the central, heavy-lifting applications in a strictly object-oriented language that compiles to C and is adapted to use the full capabilities of a customized hardware platform optimized for domain-specific solutions. In this way, the hardware and the software together can, in effect, deliver “appliances” that can be called from Python, R, or even Excel if needed. This is exactly how the $150 billion gaming industry does it with game engines.

So, assuming that we can continue to move forward with domain-specific architectures, where does that leave us? I believe it brings us to an AI- and ML-enabled world with more possibilities than we could previously imagine. So what might that world look like? I’m not sure, but I think Neal Stephenson has some good ideas, and I’ll start referencing those in the next blog entry.